This revision is from 2024/07/07 17:52.

Three major issues with O.I...

- Lifespan: while non-dividing cells are potentially immortal, the current organoid lifespan is only about 100 days.

- Size: they are too small, only about the size of a grain of salt. The bigger the organoid, the more intelligence.

- Communication: the input/output system, and effective training and communication through it.

Electrophysiology in O.I. is the study of the neuron's electrical system, so that communication methods can be formed. There are two known communication systems in the human body: chemical and electrical. While humans have five senses, the basic senses of a neuron are the electrical gradient (and its associated field) and about 100 chemicals, such as dopamine, called neurotransmitters. This language changes the mode of the cell and initiates functions from its DNA manifold.

Each time we communicate with a neuron, we are forming a sense for the neuron. The human body's five senses are multi-modal and general. Contrast that with a specialized sense, such as one for flying a plane, solving puzzles or playing a video game.

This work on electrophysiology is essential. Some of the most basic animals sense their surroundings by electrical discharge on contact: they maintain a basic voltage, and any discharge means another object has touched them or is near. Another example is seeing with magnetism. Neurons maintain an electrical difference across the membrane that, when it fluctuates, causes the neuron to act. Communication with a neuron is a change in the electrical gradient, the polarization of the cell, which causes a neurotransmitter to be released, which in turn causes some response further on. The relevant concepts are action potentials, resting membrane potential, depolarization, repolarization, refractory period...
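The terms above (resting membrane potential, depolarization, action potential, refractory period) can be sketched with a leaky integrate-and-fire model. This is a standard textbook simplification, not the method used on real organoids, and every parameter value below is an illustrative assumption rather than a measurement.

```python
def simulate_lif(input_current, dt=0.1, v_rest=-70.0, v_threshold=-55.0,
                 v_reset=-75.0, tau_m=10.0, resistance=10.0,
                 refractory_ms=2.0):
    """Leaky integrate-and-fire neuron (toy model, assumed parameters).

    input_current: injected current per time step (arbitrary nA-like units).
    Returns (voltages, spike_times) with times in ms.
    """
    v = v_rest
    refractory_left = 0.0
    voltages, spike_times = [], []
    for step, current in enumerate(input_current):
        if refractory_left > 0:
            # Refractory period: input is ignored, the cell sits at reset.
            refractory_left -= dt
            v = v_reset
        else:
            # The leak pulls v back toward rest; the input depolarizes it.
            dv = (-(v - v_rest) + resistance * current) / tau_m
            v += dv * dt
            if v >= v_threshold:
                # Threshold crossed: count an action potential, then reset.
                spike_times.append(step * dt)
                v = v_reset
                refractory_left = refractory_ms
        voltages.append(v)
    return voltages, spike_times


if __name__ == "__main__":
    # 100 ms of constant stimulation strong enough to cross threshold.
    _, spikes = simulate_lif([2.0] * 1000)
    print("spike times (ms):", spikes)
```

With these assumed values a weak input (1.0) settles below threshold and never fires, while a stronger input (2.0) fires repeatedly. That is the "fluctuate the gradient and the neuron acts" idea in its simplest form.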

The operator stimulates the neuron's gradient, triggering action potentials that cause the release of neurotransmitters, relative to an aim.

action potential → neurotransmitter: a serotonergic neuron will release serotonin, while a dopaminergic neuron will release dopamine. The action potential provides the "go" signal, and the specialization of the neuron communicates the outcome. For instance, in this scheme a dopamine neuron being triggered means success, while a serotonin neuron firing means failure.

- Reward: Dopamine

- Punishment: Serotonin, perhaps Norepinephrine.

There are roughly 100 to 150 known neurotransmitters, so narrowing it down to two is a cop-out. Some others: acetylcholine (ACh), norepinephrine (NE), GABA (gamma-aminobutyric acid), glutamate, endorphins, histamine, melatonin, adrenaline (epinephrine)...

There are many neuron types specialized to their task, such as visual cortex neurons, auditory cortex neurons, neocortex neurons, von Economo neurons (VENs)...

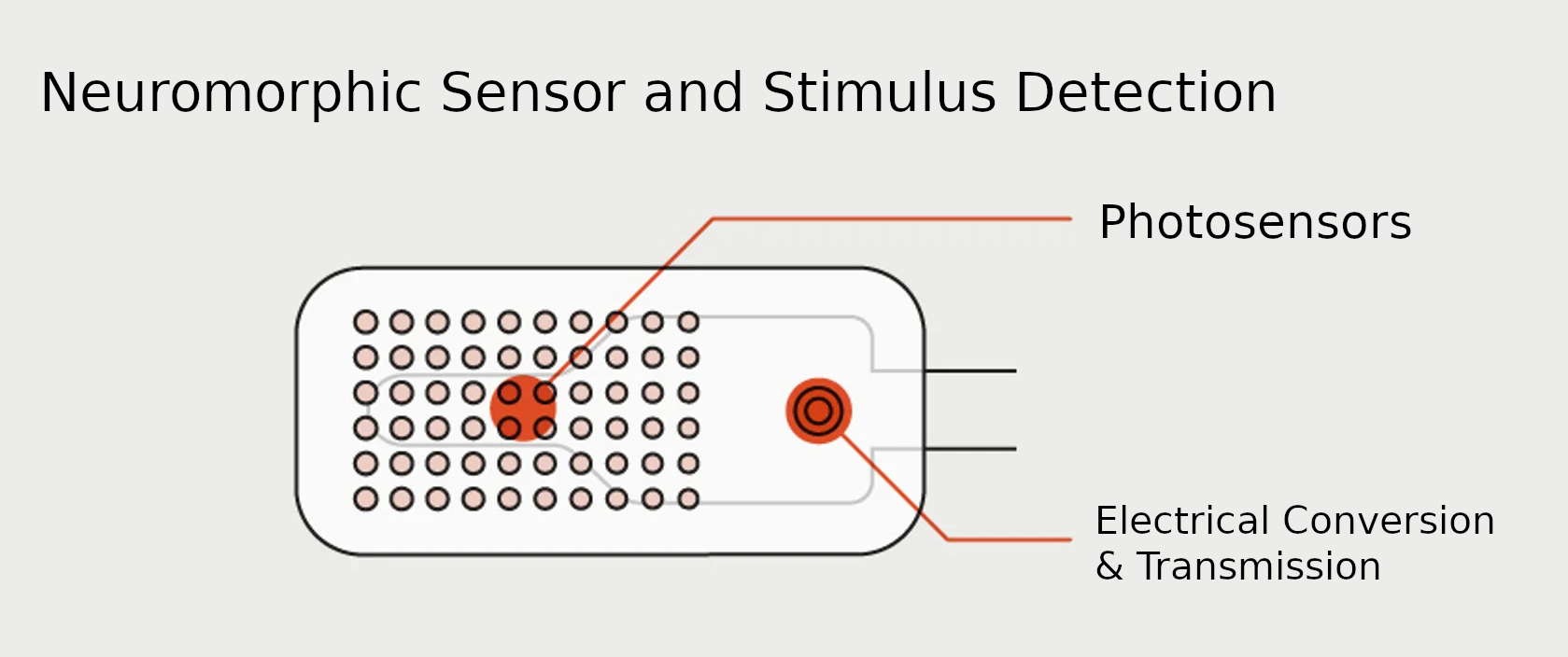

One sensor goes to one neuron type...

one stimulus → sensor → wire → specific neuron → onwards to other specializations (prep, prime and act).

Take fight or flight: a stimulus is sensed, and an action potential is sent down the wire specifically to neurons that release norepinephrine. Onwards, the communication propagates to the brain and the system is primed for fight or flight. If the animal takes down its prey and feeds, a new sense, an unassociated process, will trigger dopamine in the brain and the behavior is reinforced.

Take a feedback loop: levels rise, a sensor is activated and triggers hunger, teaching the action of feeding, which brings levels back down, turning off the sensor and providing dopamine.
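The feedback loop just described can be written as a toy simulation. This is only a sketch: the function name, the threshold and the rise and drop amounts are all made-up assumptions, and "dopamine" here is just a number recorded when feeding happens.

```python
def run_feedback_loop(steps=50, rise_per_step=1.0, threshold=10.0,
                      drop_per_feed=8.0):
    """Toy homeostatic loop: a level rises, a sensor fires, feeding lowers it.

    Returns one (level, sensor_on, dopamine) tuple per step.
    All numbers are illustrative assumptions.
    """
    level = 0.0
    log = []
    for _ in range(steps):
        level += rise_per_step              # the level drifts upward
        sensor_on = level >= threshold      # the sensor triggers "hunger"
        dopamine = 0.0
        if sensor_on:
            # Feeding brings the level back down, which turns the sensor
            # off on the next step, and a reward is delivered.
            level = max(0.0, level - drop_per_feed)
            dopamine = 1.0
        log.append((level, sensor_on, dopamine))
    return log


if __name__ == "__main__":
    for step, entry in enumerate(run_feedback_loop(steps=20), start=1):
        print(step, entry)
```

The level never stays above the threshold, because the sensor, the action and the reward are tied together in one loop.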

The simplest circuit is a sensor dedicated to a single type of stimulus that triggers a specific type of neuron, establishing an input, processing and output.

1 stimulus is connected to 1 sensor and always goes to the same group of neurons. The design relies on reinforcement learning (operant conditioning). If the neurons exhibit the desired output, they get a reward such as dopamine; if not, they get a punishment such as serotonin. You do not have to starve the organoid of new sensors or more information.
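A minimal sketch of that reward and punishment loop, as a software simulation only. The stimulus and response names, the preference table and the update rule are hypothetical stand-ins for the neuron group. No real electrode or organoid interface is modeled, and "dopamine" and "serotonin" are just +1 and -1 feedback values.

```python
import random


def train_operant(trials=500, learning_rate=0.1, seed=0):
    """Toy operant conditioning: reward desired responses, punish the rest.

    Returns the learned preference for each (stimulus, response) pair.
    """
    rng = random.Random(seed)
    # Hypothetical task: each stimulus has exactly one desired response.
    desired = {"stimulus_a": "response_1", "stimulus_b": "response_2"}
    responses = ["response_1", "response_2"]
    # Each stimulus always reaches the same "neuron group", modeled here
    # as one row of preferences per stimulus.
    preference = {(s, r): 0.5 for s in desired for r in responses}

    for _ in range(trials):
        stimulus = rng.choice(list(desired))
        weights = [preference[(stimulus, r)] for r in responses]
        response = rng.choices(responses, weights=weights)[0]
        # Reward ("dopamine") for the desired output,
        # punishment ("serotonin") otherwise.
        feedback = 1.0 if response == desired[stimulus] else -1.0
        key = (stimulus, response)
        updated = preference[key] + learning_rate * feedback
        # Keep preferences positive so every response can still be sampled.
        preference[key] = min(1.0, max(0.01, updated))
    return preference


if __name__ == "__main__":
    for pair, value in sorted(train_operant().items()):
        print(pair, round(value, 2))
```

Rewarded responses only ever gain preference and punished ones only ever lose it, so over repeated trials each stimulus ends up favoring its desired response.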

The method is to take induced pluripotent stem cells (iPSCs) and select their differentiation into a specific neuron type.

O.I. and Building Multi-Modal Sensory Systems

At some stage, multi-modal senses are required, because most applications could fall under a general, permanent sense. A repeatable organoid construction could then serve most applications. This is a far cry from the present, as organoids are only about the size of a grain of salt at the moment and only capable of operant conditioning. Organoids can always be made more effective through modularization, with a model of the brain translated into O.I.: either regional, partitioned function within a single organoid, or multiple specialized organoids interconnected via an artificial synapse. Organoids need to get larger and more complex.

The proposal is 4 senses: two input and two output.

These senses work through electrode arrays: the signal is converted to an electrical form and sent to the organoid. These peripherals are not biological; they are electronic. The 4 senses...

Input

IMMORTALITY
