O.I. Electrophysiology

This revision is from 2024/07/09 00:53.

Three major issues with O.I...

- Lifespan: while non-dividing cells are potentially immortal, the current lifespan is only about 100 days.

- Size: organoids are too small, only about the size of a grain of salt. The bigger the organoid, the more intelligence.

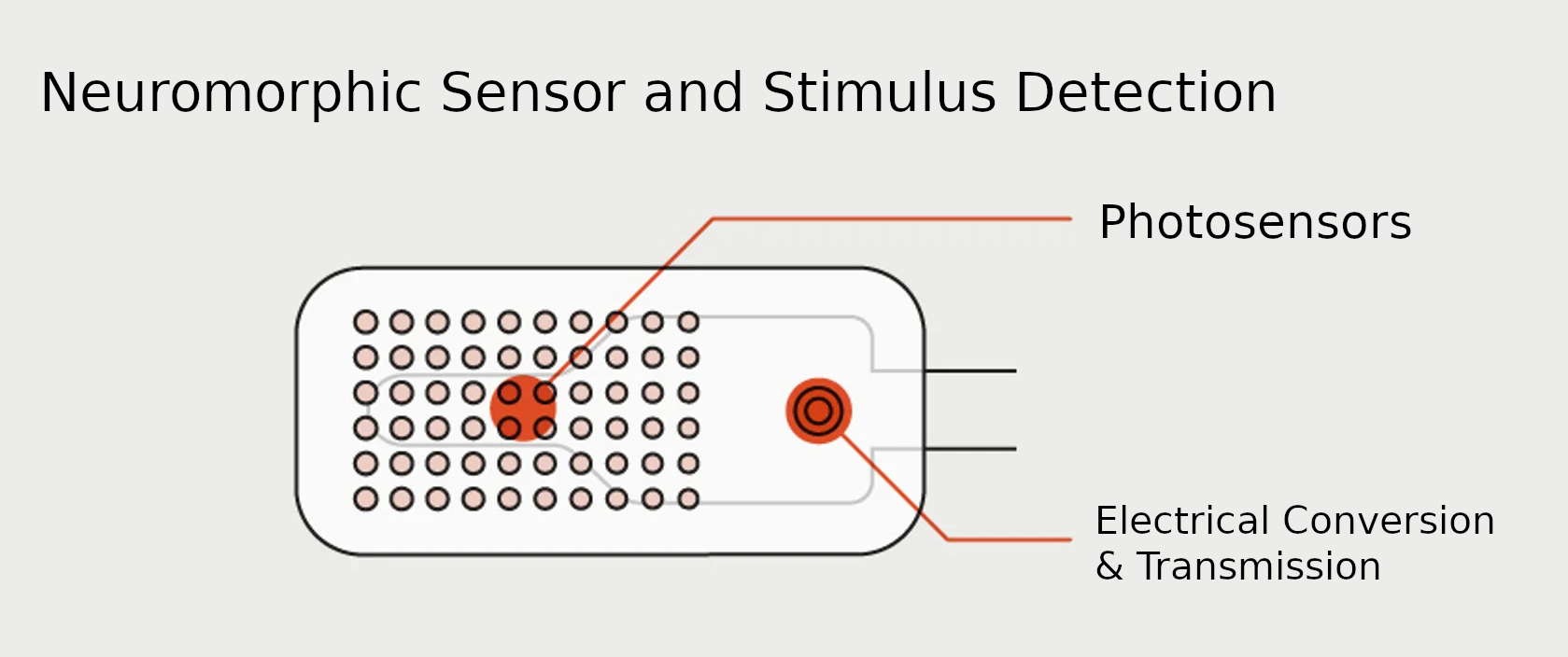

- Communication: the input/output system, and effective training and communication.

Electrophysiology in O.I. is the study of the neuron's electrical system, with the aim of forming communication methods. The human body has two known communication systems: chemical and electrical. While humans have five senses, the basic senses of a neuron are the electrical gradient (and its associated field) and about 100 chemicals called neurotransmitters, such as dopamine. This language changes the mode of the cell and initiates functions from its DNA manifold.

Each time we communicate with a neuron we are forming a sense for the neuron. The human body's five senses are multi-modal and general; contrast these with a specialized sense, such as one for flying a plane, solving puzzles or playing a video game.

Work on electrophysiology is essential. Some of the most basic animals sense their surroundings by electrical discharge on contact: they maintain a baseline voltage, and any discharge means another object has touched them or is near. Another example is seeing with magnetism.

Neurons maintain an electrical difference across the cell membrane. Communication with a neuron is a spike in the electrical gradient: the cell depolarizes, which causes a neurotransmitter to be released, which in turn causes some response further on. The relevant concepts are action potentials, resting membrane potential, depolarization, repolarization, refractory period...
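The terms above (resting membrane potential, depolarization, spike, refractory period) can be made concrete with a leaky integrate-and-fire model. This is a minimal sketch under textbook-style assumptions, not a model of any particular organoid: the function name `simulate_lif` and every parameter value (resting potential of -70 mV, threshold of -55 mV, and so on) are illustrative choices, not figures from this page.

```python
def simulate_lif(input_current, dt=0.1, tau=10.0, v_rest=-70.0,
                 v_threshold=-55.0, v_reset=-75.0, resistance=10.0,
                 refractory_ms=2.0):
    """Leaky integrate-and-fire neuron (illustrative parameter values).

    input_current: list of injected currents (nA), one per time step of dt ms.
    Returns (voltages in mV, spike times in ms).
    """
    v = v_rest                 # start at the resting membrane potential
    refractory_left = 0.0      # time remaining in the refractory period
    voltages = []
    spike_times = []
    for step, current in enumerate(input_current):
        t = step * dt
        if refractory_left > 0:
            # Refractory period: the cell is held at reset and ignores input.
            refractory_left -= dt
            v = v_reset
        else:
            # Leak pulls v back to rest; injected current depolarizes it.
            dv = (-(v - v_rest) + resistance * current) / tau
            v += dv * dt
            if v >= v_threshold:
                # Threshold crossed: record a spike, then reset.
                spike_times.append(t)
                v = v_reset
                refractory_left = refractory_ms
        voltages.append(v)
    return voltages, spike_times


if __name__ == "__main__":
    # 100 ms of constant 2 nA stimulation, strong enough to cross threshold.
    _, spikes = simulate_lif([2.0] * 1000)
    print("spike times (ms):", [round(t, 1) for t in spikes])
```

With no input the voltage stays at rest; a weak input depolarizes the cell without a spike; a strong enough input produces a regular spike train whose rate is capped by the refractory period.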

The most resonant example is fight or flight. A stimulus is detected by a sensor and triggers an action potential that sends a signal to the brain, which then releases norepinephrine, priming the body for fight or flight. If the response is successful, such as catching prey, eating generates a new stimulus, which triggers the release of dopamine in the brain, reinforcing the behavior and creating a positive association. This cycle of stimulus, response, and reward helps to solidify the behavior, making it more likely to occur in similar situations in the future.
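The stimulus, response, reward cycle can be sketched as a simple preference update. This is a hedged illustration only: the scalar `reward` stands in for a dopamine signal, the `reinforce` function and the action names are hypothetical, and the update is a plain delta rule rather than a claim about how neurons actually implement reinforcement.

```python
def reinforce(preferences, action, reward, learning_rate=0.1):
    """Return a copy of `preferences` with `action` nudged toward `reward`.

    reward: scalar stand-in for dopamine (1.0 = success, 0.0 = nothing).
    """
    updated = dict(preferences)
    updated[action] += learning_rate * (reward - updated[action])
    return updated


if __name__ == "__main__":
    # Hypothetical responses to a "prey detected" stimulus.
    prefs = {"chase": 0.0, "ignore": 0.0}
    for _ in range(20):
        # Chasing succeeds and is rewarded; ignoring yields nothing.
        prefs = reinforce(prefs, "chase", reward=1.0)
        prefs = reinforce(prefs, "ignore", reward=0.0)
    print(prefs)
```

Each rewarded repetition raises the preference for the successful response, which is the sense in which the behavior becomes more likely in similar situations.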

The most common circuit in the human body is the feedback loop, or homeostasis. Take hunger as an example. Hunger creates an impetus, and a challenge is associated with resolving it: eating resolves the hunger condition. Upon feeding, sensors resolve the impetus and also send a success stimulus to the brain. In addition, the freedom of plasticity in the human body means tools, such as hands, can be formed for the challenge. Levels rise, triggering a sensor such as hunger; levels go back down, turning off the sensor.
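The rise-and-fall behavior of such a loop can be sketched as a small simulation. It assumes a single abstract "level" that drifts upward, a sensor that switches on at one threshold and off at a lower one, and a feeding action that lowers the level while the sensor is on. The function `run_homeostasis` and all numeric values are illustrative assumptions.

```python
def run_homeostasis(steps, rise_per_step=1.0, on_threshold=10.0,
                    off_threshold=3.0, feed_drop=4.0):
    """Simulate a hunger-like feedback loop.

    Returns a list of (level, hungry) pairs, one per step.
    """
    level = 0.0
    hungry = False
    history = []
    for _ in range(steps):
        level += rise_per_step            # the level drifts upward over time
        if level >= on_threshold:
            hungry = True                 # sensor switches on: the impetus
        if hungry:
            # Feeding is the response that resolves the impetus.
            level = max(0.0, level - feed_drop)
            if level <= off_threshold:
                hungry = False            # level is back down: sensor off
        history.append((level, hungry))
    return history


if __name__ == "__main__":
    for step, (level, hungry) in enumerate(run_homeostasis(20), start=1):
        print(step, level, "hungry" if hungry else "sated")
```

Using two thresholds instead of one keeps the sensor from flickering on and off around a single set point.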

The sequence runs: impetus, challenge, resolution, then dopamine on success or serotonin on failure. The brain orients itself towards obtaining the dopamine efficiently.

The operator stimulates the neuron's electrical gradient, evoking action potentials that cause the release of neurotransmitters, relative to an aim.

In the hunger example, the impetus is provided by ghrelin. Neuropeptide Y (NPY) and agouti-related peptide (AgRP) are neuropeptides that stimulate appetite and increase food intake. The stomach also has stretch receptors that detect the presence or absence of food: when the stomach is full they signal the brain, and when it is empty the absence of that signal contributes to hunger. Other receptors send information to the brain when food intake is detected. Injecting a challenge is important.

- Reward: Dopamine

- Punishment: Serotonin, perhaps Norepinephrine.
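The reward and punishment pairing above could drive a simple training loop in which the operator scores each trial against an aim. The sketch below is hypothetical throughout: `Feedback`, `score_trial`, `run_session`, and the idea of counting spikes against a target are illustrative assumptions, not an established O.I. protocol.

```python
from enum import Enum


class Feedback(Enum):
    REWARD = "dopamine"        # delivered on success, as listed above
    PUNISHMENT = "serotonin"   # delivered on failure, as listed above


def score_trial(observed_spikes, target_spikes, tolerance=1):
    """Pick the feedback for one trial by comparing a response to the aim."""
    if abs(observed_spikes - target_spikes) <= tolerance:
        return Feedback.REWARD
    return Feedback.PUNISHMENT


def run_session(trials, target_spikes, tolerance=1):
    """Score a list of observed spike counts, one per trial."""
    return [score_trial(n, target_spikes, tolerance) for n in trials]


if __name__ == "__main__":
    # Hypothetical spike counts recorded after three stimulations.
    for result in run_session([5, 9, 6], target_spikes=5):
        print(result.name, "->", result.value)
```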

IMMORTALITY
