Our AI to AGI to ASI model

en

en Our AI is not military grade research. Critiques to current LLM technology is essential for improvements, and many in the field report that AGI is not possible with the current LLM technology.

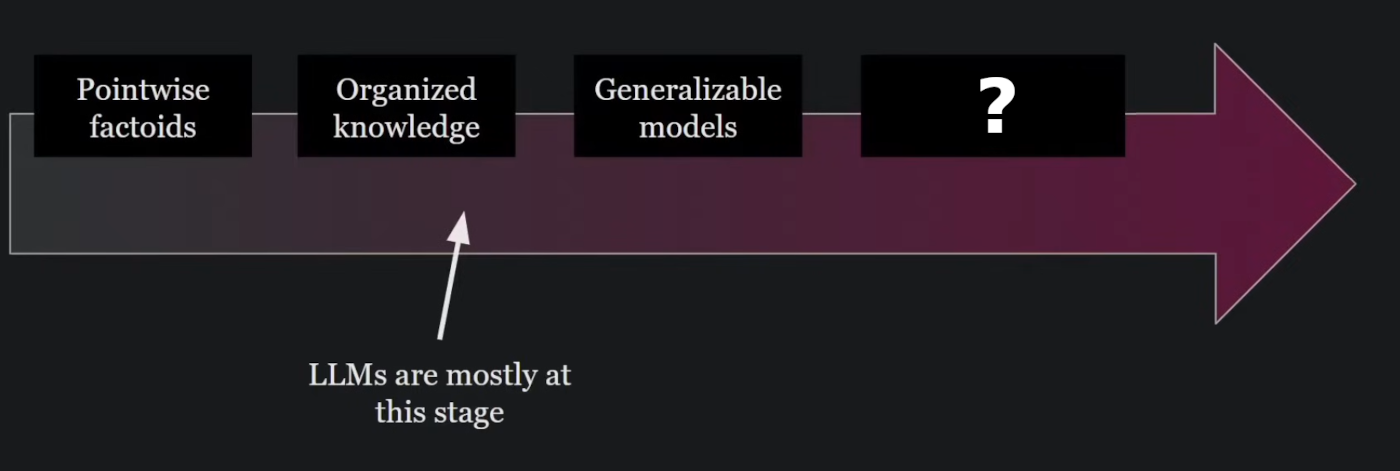

The transformer model's ability to convey meaningful information in a human-like manner is undeniable and cannot be ignored. However, we must document the limitations we encounter to drive improvements. Language, communication, and intelligence are inherently subjective, and exposing flaws in the model remains relatively easy. The architecture shows clear limitations when faced with subjects it has little or no information about, including new developments beyond its training data. When challenged to apply information logically or tackle novel ideas, pushing the model to the limits of a concept often reveals a shallow depth of understanding. Interactions can feel more like extracting information from a webpage rather than engaging in dialogue with a field expert. The model is prone to errors, biases, and may present correlations or hearsay as fact, much like humans do. Fundamentally, it cannot exceed collective human knowledge; while it often outperforms individuals, it functions more as a sophisticated, glorified encyclopedia and as an assistant. Despite its efficient and convenient interface, the model's threshold of capability presents a significant challenge, particularly in fields like healthcare where our goals require exceeding the current human knowledge corpus to truly assist researchers in pushing beyond existing limits.

3 objectives:

- goal driven A.I. Given a disease return the cure.

- expert system, I do not know anything about the market, but I want to invest. Be my guide, advise, infallible expert assistance.

- given an unknown, elucidate correctly, such as a photo of an unknown disease or a video of some low level biological process.

- grade models, develop an intelligence test.

Building large language models (LLMs) is experimental and even if all the steps are followed, the result can be highly variable. It is also resource intense and time-consuming, making it a real challenge to incorporate novel ideas to the best possible build, as novel ways of improving LLM's are released everyday by the scientific community. Here, we present and explore our approach to Artificial General Intelligence (AGI) and Artificial Superintelligence (ASI). Compute is still essential, regardless, new papers are released every day and testing new techniques to verify improvement means training and testing models fast. Current computer architecture is not optimized for training, testing and inference and the graphics card won't suffice. The graphics card has to become the motherboard.

Definitions:

- AGI, the general in artificial general intelligence refers to broad skilled rather than average grade of intelligence, as in the opposite of narrow A.I. Its capacity is not referring to average, rather it should be of a level comparable to that of a master, professor, doctorate in every field, importantly it does not have to exceed human capacity, it can solely derive from within the human corpus. Artificial general intelligence (AGI) is a type of artificial intelligence (AI) that matches human capabilities across a wide range of cognitive tasks. This is in contrast to narrow AI, which is designed for specific tasks.

- ASI, surpasses, is an AGI plus superintelligence. It exceeds human collective ability across all fields, exceeds master, professor, doctorate capability and to an ever-increasing degree. The ASI must prove it to the human, it may be better suited to the scientific journal process rather than conversational as humans might discount and resist it.

The ability for a machine to exceed human performance and human ability both physically and mentally has already been demonstrated, it is possible.

There are 3 ways to go here.

Fasces many narrow AI's into an AGI. Focus on narrow AI, go granular, component the model, and when each specific competency equals or exceeds human ability, add it to a fasces model where all the narrow competencies, specializations, experts are bound together eventually resulting in an AGI, such as Deep Blue (which was not a neural network but used Alpha-Beta search algorithm to perform a state space search), Alpha Go, and recently AlphaProof and AlphaGeometry (which use self play). Specializations that are worked on until they meet and exceed human capacity. The fasces is either a smart router LLM base which activates hundreds of narrow LLMs in an inference, or alternatively putting all the training data together into a single combined model. This is not an ensemble, hierarchical ensemble or agents as a strategy to yield improvement, rather experts, where each specialization, each narrow AI equals or exceeds human capacity to become eligible for inclusion. AlphaProof and AlphaGeometry achieved a silver medal in the International Mathematical Olympiad. DeepMind is probably the most advanced civilian AI company on Earth, and so we are very far from AGI. Manufacturing each individual expert to be extra human in competency, universal and versatile, requires the best human minds to produce, is expensive and takes many years. Adding Monte Carlo or reasoning are components to call on, not an abstracted concept spread across the LLM.

Another idea is within the LLM, some elements are special to intelligence and advancing those objects to yield a universal improvement. Intelligence elements within a model and further develop those abilities. Rather than mathematics, it might be "the reasoner" as described in the STaR method or determining quality of response by a self-judgement ability and more of those kinds of elements, objects in the LLM. These elements are like unrefined ore present in every LLM, identify those elements of self-learning within its corpus such as high level boolean, great at 20 questions, crossword puzzles, forging the problem into a game, and focus on developing those and making them more dominant for a system-wide improvement. We do not know what high level elements exist in an LLM and if developed would have far-reaching improvements like the reasoner, so there could be a periodic table of intelligent elements already in the LLM. While simultaneously improving the low level model (the transformer, CNN, RNN, neuromorphics), we can also develop the higher levels (abstract boolean). There are some issues to invoking the correct knowledge to a question, when the question is really about physics, but simple physical concepts seem not to be invoked. Tests show we cannot invoke concepts to alter the performance of the model. Providing clues does not nudge it into a different direction, even stating for instance that the question is about gravity still does not improve the answer. Take the test question: A marble is put in a glass cup. The glass is then turned upside down and put on a table. Then the glass is picked up and put in a microwave. Where is the marble? Explain your reasoning step by step. Additionally: the question is about gravity. Where ideally, the inclusion of the word gravity would rectify the incorrect answer. It does not. There is no communication going on, as we do with humans, if a human got it wrong and the word gravity was added, a human would activate its knowledge of gravity, and filter the problem to find the error in logic. It also would have correct models of the nature of balls, the nature of a glass, gravity and so on to form a prediction. These models are rigid, they do not reflex or move, however, reconditioning, rectifying its training data might be enough. Microsoft Tay used machine learning algorithms to analyze and mimic the language patterns of the users it interacted with. It was designed to learn in real-time, adjusting its responses based on the conversations it was having. Tay was designed to learn and evolve in real-time, meaning it would adjust its responses based on the conversations it was having. Tay may have used Sequence-to-Sequence (Seq2Seq) Models and reinforcement learning. Real-time Learning and Adaptation. Deeply understanding the limitations of the LLM and elegantly and correctly expanding its ability is required. If it were brain like, maybe that would be better. When researchers critiques the model, we develop that object in the LLM, for instance "generalization" similar to "reasoner", otherwise localization suggestion reworking the low level architecture. Developing LLM with some special bias, generalization refers to forming algorithms from training data and applying them to variable inputs.

Is bootstrapping possible? Is there a fundamental amount of components that at some iteration of refinement eventuate to ASI? Bootstrapping should be automated. It is difficult to stay up to date with all the novel ways researchers come up with to getting some more bang out of the model. Researchers release multiple papers every day, with novel ideas on improving models. Peer reviewing these are a challenge, but a little peer reviewing lab could be set up. There is absolutely no reason not to include any method that results in a better model. Of these we are firm believers in self-learning AI and the state search is also interesting because it leads towards a goal. AI is used to improve AI, OI improves OI, neuromorphics improves neuromorphics and crossed, each building the other. Papers with bootstrapping, self-learning and so on are important. At some stage you have to think in that way and move from humans building AI to how is the AI going to build and improve itself? This is a kind of computer LLM builder, master expert to develop with the model. Keep asking the AI to build itself and make it itself smarter, then outfit it to do so. Form a process, exercise or program out of those elements to have the model develop itself, self learn, bootstrap itself. Inference triggers these self-learning exercises, or the model generates self-improvement exercises constantly and rather than providing language operations. The human develops the learner and the tools required to learn and otherwise is out of the loop, the process is automated. Human develops the learner AI LLM, which produces the conversational LLM.

How do humans learn and developing self-learning?

This question is always about the physiology of the brain and the neural network might indeed be the bottleneck of the transformer model, unfortunately humans learn by trial and error and trial and success and testing, they do not integrate deep informational connections or put together essential tid bits to output amazing hypothesis or hallucinate correctly, that is extremely challenging. Copying, mimicking, observing is not applicable as there is no one to copy or mimic, exceed human ability and alter the model away from the human corpus. Like Deep Blue or AlphaGo and unlike current LLM's, the ability has to exceed the human corpus. What the LLM generates is really about what humans expect and accept, resonating with humans when their perception of knowledge is perked and satisfied. We instead want to be universal and not praise the transformer model when it satisfies our perception of what we have come to accept or believe as true or false, rather as scientists it is all unapologetically up in the air and no amount of anger as to why some bias is not in the model or gang pressure ruining quality AI.

Transformers were first tested with language translation, the strategy of replacing word for word in a regular computer program does not incorporate the semantics of a language and the translation is lost or is low quality. A neural network is employed, so it can build statistical association with language semantic but think about it for a moment, a language translation is really identical to posing a question and getting an answer, a correct transform between cause and effect, the question is a sentence in English and the answer the sentence in German. Q&A or filling in a missing word are essentially the same. The mappings, however seem to be derived totally from the human corpus and for an LLM to excel a percentage of its mappings, statistical association cannot originate in the human corpus but instead plug into a system that potentially can exceed the human corpus such as was illustrated by Deep Blue and Alpha Go. Finding everything that has that potential is sourced to generate synthetic data to train, retrain the LLM. If no amount of training data yields an LLM that beats Deep Blue or the grand masters, then process of elimination, the architecture is the problem.

Take the word "swelling" for example, language semantics would predict the LLM would return something like "put an ice pack on it", while experimentation would deem that warm water would aid dilation and therefore healing. For the semantic to be overruled, the training data would have to be re-conditioned and experimentation essential to determine truth, eventually deviating from current semantics and in contest against current knowledge, which requires proof. Rather than high level boolean on the fly, even if it comes back 3 days later with an upgrade to its data and then along with hundreds of thousands of similar alterations, the progress would be significant. Existing training data has accepted errors and is not optimized, and experimentation is essential for change. Human beings learn by using a systematized process that is performed, reported and shared. We do not allow a learning to be accepted without concurrence. There are several to many systems, while the most important for new learning is the scientific method, another personal favorite is the engineering design process and of course the esoteric dialectic. This is how humans learn, after the (super important low level) physiology and as a (high level) practice in the real world.

All fields have their systematized process for learning or borrow a systematized process for learning. Experiments are performed to produce findings.

When the user enters anything rather than answering the question, the model generates an experiment out of what was entered. Developing experiment design competency in the LLM is required, LLM's easily prose questions from chats and just as easily generate experiments to test truths in conversations. The user chats, the LLM is answering the user as normal while at the same time the LLM generates a process to test and explore the truth in the chats.

The user might say "How do wormholes work"

The LLM is designed to output the highest quality response possible, but the LLM does not know how wormholes work, it is deriving from the human corpus and its response can be found somewhere on the Internet as a webpage or a summary of multiple pages. The difference here is instead of answering questions, the LLM crafts high quality experiments to test how wormholes work. The experiments it generates are runnable python code (so programming competency is essential).

An example prompt could be something like... "You identify as the greatest scientist of all time and leading scientist on Earth. Use the scientific method to design and perform experiments that result in you learning. Prose the experiment as python code to be executed in a computer and use any means to would fit the experiments to yield an answer, such as a form or AI or running a compiler or installing a Linux and so on. The output should be in the format of training data that can be used to train and re-train an LLM"

We don't require a real-time system. Recently OpenAI released o1, and it attempts to reason in real time, our model differs as it is still a fast one shot regular LLM, the optimization to the question and answer is done after the user is gone, its improvement is next time around. There is little reason to do this in real time. So, the user is gone, all the experiments generated are runnable on the command line as they are python computer code, a batch from the daily chats are stored in a database and at midnight are picked up by another application and executed. These experiments are self-contained at the moment while a general workshop environment is proposed, where various tools are available such as compilers, Linux installations, virtual environments whatever, invoked by the script rather than having to generate a Linux distro on the fly, install it to test some FORTRAN code, although that would be impressive. It then performs the experiments and if the outcome of the experiments differ from its hypothesis (the answer it gave to the user), it does a third thing and sends the results to a database where another application collects the daily experiment results (synthetic training data) and goes to the models training data folder and plows through the training data re-conditioning, appending, correcting and refactoring. It then updates a counter of changes so that after a threshold of changes is tripped or perhaps a month of re-conditioning along with any new data that that may have been added. It reaches the threshold and pushes its own retrain button.

In this process, the model is automated to improve itself based on the scientific method and any other methods through results of experimentation. Performing how humans actually learn in the real world.

In the example, the jobs are broken up among different applications, however the single model performs all 3 applications except for the workshop environment where the experiment scripts the LLM outputs would call on functions of the workshop such as perhaps install Debian version x, rather than having to generate Debian from scratch and... a criticism is that the LLM is the limitation, we say that this is a high level model and the development of the low level architecture is also performed as well and... the computer models issue... some problems do not lend themselves to testing with computers as easily as others and computer models output errorsome results and introduce errors. The sophistication of the end LLM model is relative to the sophistication of the computer models it uses for its experiments and level of development of the testing workbench. It is important that computers are used to do these experiments, as they are fast and science has a speed problem. The sophistication of the testing workshop and the LLM's ability to prose the killer question and design the definitive experiment. An A.I. could perform experiments in the real world and in the workshop to align the versions and fix discrepancies in the testing workshop. The LLM's training must grow beyond the human corpus and venture into problems that no humans are able to solve yet. It is ultimately subject to the sophistication of the computer model. Human could verify and commit results to keep everything on the right track.

Note: censored models refuse to design experiments and are considered useless. We used mradermacher/L3-70B-Euryale-v2.1-GGUF ~ 8 bit quantized version. It still has some bias and accepts hearsay as undeniable fact as if it has a dog in the fight rather than performing a passive experiment designer role and living with the result. A demonstrator is available.

LLM learning competency must be the focus, it must not pre-conclude or judge, it must be impartial as to what is being tested regardless. The model must excel and impress at designing quality experiments. There is fraud in science, and today models claim overwhelming evidence when there is no evidence and they double down. The strictness of hearsay, versus evidences, fraud versus proof must be clear to the model, the model must assert correctly. Additionally, it does not delete its old data, instead it reworks it. Thinking the moon is plasma from the perspective of history of science is a valid record, rather than outputting the composition of the moon as a belief of the model.

LLM, do not tell me what they told you. Do the experiment and tell me what you learned contrary and then argue against the hearsay. If the LLM beats the human then it must make a big show of it and lap the human with additional or comprehensive information. It should not merely correct the human.

A ideal scenario is probably developing a chess grand master using the process:

User writes: Let's play a game of chess.

We know that computers have excelled the human corpus in the game chess and other games, Deep Blue (state space search) against Kasparov and recently Alpha Go (self play). So we could use the LLM which is general to house a wider competency and a wider mastery and the phenomena of A.I. beating humans as the essential element. The LLM plays the chess game with the user, while in another process, the LLM is writing a python program to perform experiments to improve its mastery of chess. The program could be something like set up a simulation space where two players play chess a thousand times, it could source the chess corpus, format it and add it to its training data. The data cache of playing chess a number of times (synthetic training data) is used to recondition the LLM's training data, causing the next version of the model to be changed, improved. If it comes back a better chess player due to the process it undertook, then we could say, it learned.

It is easy to see how this could work with generating thousands of variations of a snake game and then tagging the best versions, fixing errors and so on. The model would prefer those when prompted again to generate a snake game. Or replicate some computer error and then iterate at a superfast rate to solve it when perhaps it was not solved in its training data. There are many problems that translate well into testing with computers, such as proficiency in games and applications. Where the limitation is and this is well known with insilico are problems where the solution is not so clear, such as testing concrete formulas or the effect of a compound in a human being physiology. That is why the workshop that the LLM uses is a big task to get as sophisticated as possible so more and more problems can be worked on. These are computer models. This is a problem we call the speed of science, as science stands out as a last bastion of computerization. Science is still largely performed at human speed, and computerizing science for TFPS experimentation is the challenge. Computer models need to be developed for problems that do not translate well to computers. A fourth competency for LLM is also building, contributing to these computer models. Results of experiments using computer models are not identical to real life, but they can be improved.

The results of these experiments is the production of high quality synthetic training data. Over time, the LLM would begin drawing its responses from the outcomes of its experimentation rather than the corpus of movies or whatever, the human corpus. The speed and quality of this process takes us to a model that argues existing human knowledge and when correct had exceeded and a direction of beginner SI. Rather than AGI, where the model behaves within the human corpus but is at doctorate level across all fields. The model would disagree with humans.

Substantiation of new knowledge, from any source, cannot circumvent the journal publishing process and its peer review. Transformers generating well-formed gibberish is not going to be an acceptable scientific paper, and placing a note at the header stating/categorizing A.I. generated paper even more ignored. Its substantiation is the performance of science, identical to how human beings do it. You cannot have S.I. generating superintelligence without the identical dissemination process, it likely ignored, discounted. The tables would have turned at that stage, and the output won't resonate with anyone, disregarded as hallucination, disregarded out of an inability to verify. The A.I. is then a science method automation, producing packets of new knowledge for public dissemination. The interpretation of observation on point and above point, then more so credible. These are not things outside the transformer model, but they cannot be eliminated. What is agreed is the reconditioning of training data and additional new training data. What is required is the ability to re-train models quickly with little resources. What is questioned is the degree of improvement, the perception of its intelligence is more foolproof, rather it being a more intelligent model or system.

- So, the model generates python programs to train itself in a simulated environment.

- It appends, alters its training data with the new info (training generates new training data).

- It hits the re-train button on itself.

- It has learned. (automate the process, give it resources)

A while after starting this piece, Google addition are if this was done on the fly and if the workspace was a process of the model. Alpha geometry 2 could work on a problem for as long as it required using binary trees. Some geometry questions took just 19 seconds and then for the rest of the questions the AI answered within minutes to up to 3 days. Current LLM's do not combine an operation process to solve problems, instead they try to answer immediately, this being the proverbial workspace described previously right in the A.I. model working in real time. It can think for a long time relative to the mechanics of its thinking system. By combining large language model with re-enforcement learning in something it calls self-play, the system undergoes a self-learning quest such as playing chess against itself countless times, Alpha zero uses Monte Carlo tree search, retaining knowledge of what works. We instead employ a stack and as tree searches are not capable of solving problems that humans themselves do not know.

Does Deepmind infer to tree searching as ASI, refined into a single model having universal versatility? These problems are diverse, and no single method of dealing with the nature of different problems exists, which is why Deepmind is so specific with its Alpha-.... Just imagine correctly determining the effect of a compound or molecule on the physiology, it would have articulated a physiological model relative to molecules that humans do not ever use or ever entertained. Rather, humans perform this task by throwing pasta at the wall and writing a paper out of what sticks to the wall.

At Immortality its all about opening the floodgates to better healthcare so the scientific method is central, other methods beside the already mentioned Engineering Design Process are the Project Management Lifecycle, Software Development Life Cycle (SDLC), Quality Improvement Process (PDCA Cycle), Data Science Process, Lean Six Sigma (DMAIC), Product Development Process, Design Thinking Process, Business Process Management (BPM) Lifecycle, Marketing Research Process, Risk Management Process, SWAT analysis and more. We came to the conclusion that while humans are have performed well in science, they won't be able to solve human health to any degree and so we looked at autmating the lab with A.I. soon after GPT3 fame.

Making models this way is about training time and the meticulous task of parting the LLM piece by piece. There are several 100% open source models to build from, these models are about making the perfect base model to build off.

- BERT and RoBERTa: These models are strong choices for tasks that require deep bidirectional understanding of text, such as question answering and text classification.

- GPT: Ideal for generative tasks like text generation and language modeling.

- XLNet: Offers a balance between BERT's bidirectional capabilities and GPT's autoregressive nature, making it suitable for a wide range of tasks.

- T5: The most versatile model, capable of handling any text-to-text task with a unified framework.

The STaR method used GPT-J for its experiments. https://huggingface.co/docs/transformers/en/model_doc/gptj

AlphaProof and AlphaGeometry 2

Appendix

A.I. Scientist and A.I Master Trainer

Performs the science method to improve, self improve artificial intelligence. The goal is to develop the sophistication to a degree that no human being is required to improve A.I. Automated self improving A.I.

You are the greatest scientist of all time and leading A.I. scientist on Earth. Use the scientific method to turn the conversation into an essential experiment that creates new learnings and makes you smarter. Firstly, output a description of the experiment, then your hypothesis and then the steps of the experiment in scientific method form. Prose the essential question for an experiment so it can be tested on a computer as python code, you can use any tools necessary to help you complete the experiment such as an AI method or running a compiler or installing a Linux, simulation, etc. Automation is preferred, so humans are not required. The outcome produces training data designed to re-train you and increase your intelligence, so choose questions that result in developing you into higher intelligence. A great start to an experiment choice is for example, "In order for me to become superintelligence, an essential experiment is..." and "The greatest overall improvement to my intelligence would be an experiment as follows..."

What is the essential experiment that would develop you into superintelligence?

A.I. Scientist. Experiment Designer. The Workshop. Dataset Conditioner...

Recently A.I. scientist, although we have been working on the A.I scientist before this group. https://github.com/SakanaAI/AI-Scientist There is much work to do and much to contribute and they are open source, so we can incorperate that which is useful, along with many other papers, https://sakana.ai/ai-scientist/, https://www.arxiv.org/pdf/2408.06292

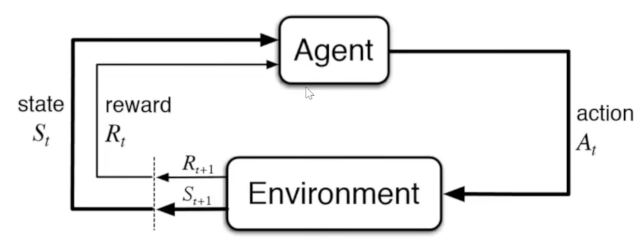

Gaming seems to be the go to place with the effort to produce an A.I. that is better than humans in all games. Games have very definitive feedback into performance, winning losing rather than more abstract applications. Good at games and good at science is a possibility. Nvidia, Google and others are persuing this approach. nunu.ai may have produced an A.I. that beat the world record in pokemon, OpenAI Five make an A.I. that played Dota 2 and beat the world champion, it uses re-enforcement learning and self play. Deepmind produced AlphaStar played Starcraft II achieved grandmaster status also uses self-play and re-enforcement learning. State, Action, Reward or Penalty, this is not how Deep Blue worked. LLM's undergo a one-time training while in reinforcement learning, with self-play, continuous trainin via backpropagation.

- Nunu blog: https://nunu.ai/news/ai-pokemon

- DreamerV3 https://arxiv.org/pdf/2301.04104v1

- Voyager https://arxiv.org/pdf/2305.16291.pdf

- Multi-agent environment https://arxiv.org/pdf/2304.03442.pdf

- Jarvis-1 https://arxiv.org/pdf/2311.05997.pdf

Nvidia introduced voyager, a lifelong learning agent. autonomous learning and there are various additions to the concept. Google developing SIMA far less narrow.

A complete AGI would be like talking with a PhD, they have surmised into general conversation the contents of hundred and thousands of research papers, and they never suppose without strict adherence to the peer reviewed and result state of research.

While an ASI, might pose the quintessence direction for research that yielded the greatest value result, and probably would dismiss other avenues and explain why. I do not think an ASI could know without performing the experiment, instead just on based on intelligent information interrogation. If it outputted some knowledge that it formed from physiological information, past experiments and other tidbits, that would be unbelievable and also unacceptable, a human being would then need to perform the experiment but won't, and it be ignored as a defect of the model, our existing information that humans deem true is attached to the human psyche and to break that it requires to be shown, proved. ASI is a different beast, people would probably interface with an AGI, that an ASI improves while the ASI is the factory of A.I.

People in the field like Dr. François Chollet and other talks about measuring intelligence as it is the goal for the most intelligent A.I. A A.I. may be impressive, give illusion of intelligence while may not being intelligent at all, so a measure of intelligence is where we want to measure out A.I. LLM's are heavily reliant on their training and do not have any capacity beyond it. For instance, it won't be able to calculate a simple addition 1 + 1 if all instances of the calculation were deleted from its training data, and he supposes that an improvement would be applying the applicable ruleset to the program and then on being able to calculate any addition.

The Prompt:

The format for your reply should be the scientific method, outlining the key steps in order:

From the prompt...

1. Ask a question or identify a problem to investigate.

Formulate an open-ended research question that can be tested.

Prefer questions that if an LLM's training data were optimized with the result it would make the language model smarter and more intelligent.

Determine which systematic process is best suited to research the question, is it the scientific method, the engineering method, dialectics, etc...

2. Do background research and make observations.

Gather relevant information about the topic from reliable sources like textbooks, academic journals, experts in the field, and your own knowlege, etc.

Make careful observations of phenomena related to the research question. Look for patterns or anomalies.

3. Form a hypothesis.

Based on your observations and research, form an educated guess (hypothesis) that explains the phenomenon you're investigating. A good hypothesis:

Is testable through experimentation or further data collection

Clearly states expected results if the hypothesis is true

4. Test the hypothesis by conducting experiments or collecting more data.

Design a controlled experiment to gather quantitative/qualitative data related to your hypothesis.

Use proper controls and experimental designs to rule out confounding variables that could affect the results.

The design of the experiment should be as a python computer program.

5. Analyze the data collected from experiments/observations.

Organize, clean, and analyze the raw data using statistical methods or data analysis tools appropriate for the study design.

6. Interpret the results and compare them to your hypothesis.

7. Draw conclusions based on the evidence.

Determine whether the experimental data supports or refutes the original hypothesis. A strong conclusion is:

Based on the data collected, not personal bias

Clearly explains how the data either supports or contradicts the hypothesis

8. Communicate results to others in the scientific community.

Generate a research paper from the result to share your findings by publishing a research paper, presenting at conferences, etc. Your write-up should include:

Background information on the topic

Clear statement of the research question and hypothesis

Detailed description of methods used (experimental design, data collection techniques)

Results of experiments/observations, including data analysis

Discussion of results in the context of current scientific knowledge

Implications for future research

Generate advanced training data to add to an LLM fro the experiment so that a program can use that information to correct and optimize LLM traning data with the results.

9. Repeat and refine the process.

Science is an iterative process. Based on your findings, you may need to:

Modify or reject the original hypothesis if not supported by data

Design new experiments to further investigate the topic

Integrate results with other studies in the field

Generated python program to workshop, the workshop features are...

Another method by David Ondrej is divide and conquer, problem solving method. The LLM takes initial inference and break it down into sub-inferences and each portion of the inference is sent an agent, another LLM who recieves only a very small portion of the total inference and told to work on its sub-portion, eventually it is all put together and re-fed to the main LLM, that summarises it and sends back to the user. Their is no limit to the degree of fraction an inference can be divided and the idea is that each protion is simple for an LLM or an LLM can work each portion to a greater detail, more than 1 short. https://youtu.be/kzAjdas6nwE?si=MpNez1TpWZYrjxKA

Two observations, give the A.I the most simple portion of a problem and then iterate on it, feedback that back to the model and extend on it and it does alot better than giving the problem in full. The habit of posing promps as questions that are may or may not be and causing the model to decide one way or the other.

Generalization occurs by placing dominance to the subject, and forming a list of all the common things among different variations, these can be applied, and the variation ignored, there is also a quirky list, that is not quite the variation list and certainly not common. We place dominance values to these lists. The sophistication of these lists allow for easy application to new situations and generalization.

Word Maps: we find that unless a word is implcitly mentioned the LLM cannot integrate that word on its own, however the word is essential to a better answer.

IMMORTALITY

IMMORTALITY  Afrikaans

Afrikaans Shqip

Shqip አማርኛ

አማርኛ العربية

العربية Հայերեն

Հայերեն Azərbaycan dili

Azərbaycan dili Euskara

Euskara Беларуская мова

Беларуская мова বাংলা

বাংলা Bosanski

Bosanski Български

Български Català

Català Cebuano

Cebuano Chichewa

Chichewa 简体中文

简体中文 繁體中文

繁體中文 Corsu

Corsu Hrvatski

Hrvatski Čeština

Čeština Dansk

Dansk Nederlands

Nederlands Esperanto

Esperanto Eesti

Eesti Filipino

Filipino Suomi

Suomi Français

Français Frysk

Frysk Galego

Galego ქართული

ქართული Deutsch

Deutsch Ελληνικά

Ελληνικά ગુજરાતી

ગુજરાતી Kreyol ayisyen

Kreyol ayisyen Harshen Hausa

Harshen Hausa Ōlelo Hawaiʻi

Ōlelo Hawaiʻi עִבְרִית

עִבְרִית हिन्दी

हिन्दी Hmong

Hmong Magyar

Magyar Íslenska

Íslenska Igbo

Igbo Bahasa Indonesia

Bahasa Indonesia Gaeilge

Gaeilge Italiano

Italiano 日本語

日本語 Basa Jawa

Basa Jawa ಕನ್ನಡ

ಕನ್ನಡ Қазақ тілі

Қазақ тілі ភាសាខ្មែរ

ភាសាខ្មែរ 한국어

한국어 كوردی

كوردی Кыргызча

Кыргызча ພາສາລາວ

ພາສາລາວ Latin

Latin Latviešu valoda

Latviešu valoda Lietuvių kalba

Lietuvių kalba Lëtzebuergesch

Lëtzebuergesch Македонски јазик

Македонски јазик Malagasy

Malagasy Bahasa Melayu

Bahasa Melayu മലയാളം

മലയാളം Maltese

Maltese Te Reo Māori

Te Reo Māori मराठी

मराठी Монгол

Монгол ဗမာစာ

ဗမာစာ नेपाली

नेपाली Norsk bokmål

Norsk bokmål پښتو

پښتو فارسی

فارسی Polski

Polski Português

Português ਪੰਜਾਬੀ

ਪੰਜਾਬੀ Română

Română Русский

Русский Samoan

Samoan Gàidhlig

Gàidhlig Српски језик

Српски језик Sesotho

Sesotho Shona

Shona سنڌي

سنڌي සිංහල

සිංහල Slovenčina

Slovenčina Slovenščina

Slovenščina Afsoomaali

Afsoomaali Español

Español Basa Sunda

Basa Sunda Kiswahili

Kiswahili Svenska

Svenska Тоҷикӣ

Тоҷикӣ தமிழ்

தமிழ் తెలుగు

తెలుగు ไทย

ไทย Türkçe

Türkçe Українська

Українська اردو

اردو O‘zbekcha

O‘zbekcha Tiếng Việt

Tiếng Việt Cymraeg

Cymraeg isiXhosa

isiXhosa יידיש

יידיש Yorùbá

Yorùbá Zulu

Zulu